The main idea behind any ORM framework is to replace writing SQL with manipulating objects. In the Java world, this means putting a layer on top of JDBC that lets you simply create a POJO, assign values to its fields, and tell it “Persist yourself in the database.” This is what Hibernate is for. In this article, we look at an overview of how Hibernate works and at its main components. After going through it, you should be able to answer questions about Hibernate in an interview.

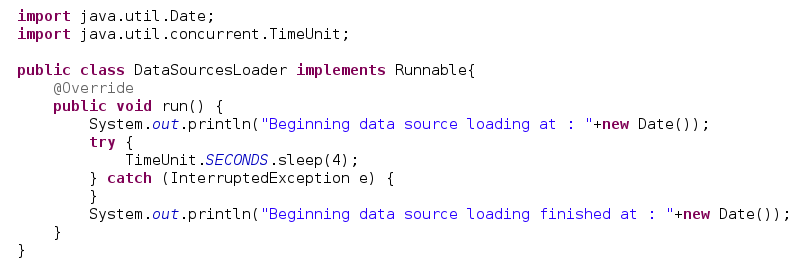

Hibernate's primary feature is mapping Java classes to database tables (and Java data types to SQL data types). Hibernate also provides data query and retrieval facilities. It generates the SQL calls and relieves the developer of manual result set handling and object conversion. Applications using Hibernate are portable across the supported SQL databases with little performance overhead.

Hibernate architecture

As the architecture picture shows, how much of Hibernate you use depends on your needs. At its simplest, Hibernate provides a lightweight architecture that deals only with ORM. Full-blown usage abstracts most aspects of persistence, such as transactions, entity and query caching, and connection pooling.

How Hibernate Works

Hibernate’s success lies in the simplicity of its core concepts. At the heart of every interaction between your code and the database lies the Hibernate Session. The image gives an overview of how Hibernate works, and it needs little explanation if you already have some idea of Hibernate.

The heart of any Hibernate application is its configuration. Two pieces of configuration are required in any Hibernate application: one sets up the database connection, and the other defines the object-to-table mapping.

Let's go through a basic introduction to each component and its role in Hibernate.

Configure the Database Connection (.cfg.xml)

To create a connection to the database, Hibernate must know the details of our database, tables, classes, and other mechanics. This information is ideally provided as an XML file (usually named hibernate.cfg.xml) or as a simple text file with name/value pairs (usually named hibernate.properties).

In the XML style, we name the file hibernate.cfg.xml so the framework can load it automatically.

Because I am using MySQL as the database, this hibernate.cfg.xml file declares the connection details for MySQL: the driver class, the connection URL, and the dialect.

The preceding properties can also be expressed as name/value pairs. For example, here’s the same information represented as name/value pairs in a text file titled hibernate.properties:

    hibernate.connection.driver_class = com.mysql.jdbc.Driver
    hibernate.connection.url = jdbc:mysql://localhost:3307/JH
    hibernate.dialect = org.hibernate.dialect.MySQL5Dialect

Here connection.url is the URL we connect to, driver_class is the JDBC driver class used to make the connection, and dialect tells Hibernate which database dialect we are using (MySQL, in this case). If you follow the hibernate.properties approach, note that every property is prefixed with “hibernate” and follows the hibernate.* pattern, for instance hibernate.connection.url.
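To make the name/value format concrete, here is a minimal sketch that reads the three settings with the standard java.util.Properties class. The class name HibernatePropertiesDemo and the parse helper are made up for illustration; Hibernate reads hibernate.properties on its own, so this only shows what the file format looks like to Java code.

```java
import java.io.IOException;
import java.io.StringReader;
import java.util.Properties;

// Hypothetical demo class, not part of Hibernate.
class HibernatePropertiesDemo {

    // The three settings from the article, in hibernate.properties form.
    static final String SAMPLE =
            "hibernate.connection.driver_class = com.mysql.jdbc.Driver\n"
          + "hibernate.connection.url = jdbc:mysql://localhost:3307/JH\n"
          + "hibernate.dialect = org.hibernate.dialect.MySQL5Dialect\n";

    // Parses name/value text using the standard Properties format rules.
    static Properties parse(String text) {
        Properties props = new Properties();
        try {
            props.load(new StringReader(text));
        } catch (IOException e) {
            throw new IllegalStateException("could not read properties", e);
        }
        return props;
    }

    public static void main(String[] args) {
        Properties props = parse(SAMPLE);
        // Iteration order of the names is not guaranteed.
        for (String name : props.stringPropertyNames()) {
            System.out.println(name + " -> " + props.getProperty(name));
        }
    }
}
```

Whitespace around the = sign is ignored by the Properties format, so the values come back without leading spaces.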

Beyond providing the configuration properties, we also have to provide the mapping files and their locations. A mapping file describes how object properties map to the column values of a row. This mapping is done in a separate file, usually suffixed with .hbm.xml. We let Hibernate know about our mapping definition files by including mapping elements in the previous config file, as shown here:

    <hibernate-configuration>
      <session-factory>
        ...
        <mapping resource="table1.hbm.xml" />
        <mapping resource="table2.hbm.xml" />
        <mapping resource="table3.hbm.xml" />
      </session-factory>
    </hibernate-configuration>
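As a rough illustration of what such a file contains, the sketch below parses a small hibernate.cfg.xml-style document with the JDK's DOM parser and lists its properties and mapping resources. The property elements are an assumption: they mirror the three name/value pairs from hibernate.properties without the hibernate prefix. The class CfgXmlDemo and its helpers are hypothetical; in a real application Hibernate parses the file itself.

```java
import java.io.StringReader;
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Document;
import org.w3c.dom.Element;
import org.w3c.dom.NodeList;
import org.xml.sax.InputSource;

// Hypothetical demo class, not part of Hibernate.
class CfgXmlDemo {

    // Assumed XML form of the settings; the mapping entries are the article's.
    static final String CFG =
          "<hibernate-configuration>\n"
        + "  <session-factory>\n"
        + "    <property name=\"connection.driver_class\">com.mysql.jdbc.Driver</property>\n"
        + "    <property name=\"connection.url\">jdbc:mysql://localhost:3307/JH</property>\n"
        + "    <property name=\"dialect\">org.hibernate.dialect.MySQL5Dialect</property>\n"
        + "    <mapping resource=\"table1.hbm.xml\"/>\n"
        + "    <mapping resource=\"table2.hbm.xml\"/>\n"
        + "    <mapping resource=\"table3.hbm.xml\"/>\n"
        + "  </session-factory>\n"
        + "</hibernate-configuration>\n";

    static Document parse(String xml) {
        try {
            return DocumentBuilderFactory.newInstance()
                    .newDocumentBuilder()
                    .parse(new InputSource(new StringReader(xml)));
        } catch (Exception e) {
            throw new IllegalStateException("not well-formed XML", e);
        }
    }

    // Collects <property name="...">value</property> pairs in document order.
    static Map<String, String> properties(String xml) {
        Map<String, String> out = new LinkedHashMap<>();
        NodeList nodes = parse(xml).getElementsByTagName("property");
        for (int i = 0; i < nodes.getLength(); i++) {
            Element e = (Element) nodes.item(i);
            out.put(e.getAttribute("name"), e.getTextContent().trim());
        }
        return out;
    }

    // Collects the resource attribute of every <mapping> element.
    static List<String> mappingResources(String xml) {
        List<String> out = new ArrayList<>();
        NodeList nodes = parse(xml).getElementsByTagName("mapping");
        for (int i = 0; i < nodes.getLength(); i++) {
            out.add(((Element) nodes.item(i)).getAttribute("resource"));
        }
        return out;
    }

    public static void main(String[] args) {
        System.out.println(properties(CFG));
        System.out.println(mappingResources(CFG));
    }
}
```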

Create Mapping Definitions

Once we have the connection configuration ready, the next step is to prepare the table1.hbm.xml file, which holds the object-table mapping definitions. The following XML snippet defines the mapping for our movie domain object (the class com.java.latte.table1) against the TABLE1 table:

    <hibernate-mapping>
      <class name="com.java.latte.table1" table="TABLE1">
        <id name="id" column="ID">
          <generator class="native"/>
        </id>
        <property name="title" column="TITLE"/>
        <property name="director" column="DIRECTOR"/>
        <property name="synopsis" column="SYNOPSIS"/>
      </class>
    </hibernate-mapping>

There is a lot going on in this mapping file. The hibernate-mapping element holds all the class-to-table mapping definitions. The individual mapping for each object is declared under a class element. The name attribute of the class tag refers to our POJO domain class com.java.latte.table1, while the table attribute refers to the table TABLE1 to which the objects are persisted.

The remaining properties indicate the mapping from the object’s variables to the table’s columns (e.g., the id is mapped to ID, the title to TITLE, director to DIRECTOR, etc.). Each object must have a unique identifier, similar to a primary key on the table. We define this identifier with the id tag, using the native generation strategy.
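For reference, a POJO that would fit the mapping above might look like the following sketch. The class name Movie, the Long id type, and the String field types are assumptions, since the article does not show the class. By default, XML mappings access each mapped property through JavaBean-style getters and setters and need a no-argument constructor, so the small hasAccessors helper (also hypothetical) checks that each mapped name has both.

```java
import java.lang.reflect.Method;

// Hypothetical check that each mapped property has a getter and a setter.
class MovieMappingDemo {

    static boolean hasAccessors(Class<?> type, String property) {
        String suffix = Character.toUpperCase(property.charAt(0)) + property.substring(1);
        boolean getter = false;
        boolean setter = false;
        for (Method m : type.getDeclaredMethods()) {
            if (m.getName().equals("get" + suffix) && m.getParameterCount() == 0) getter = true;
            if (m.getName().equals("set" + suffix) && m.getParameterCount() == 1) setter = true;
        }
        return getter && setter;
    }

    public static void main(String[] args) {
        Movie movie = new Movie();
        movie.setTitle("Sample title");
        movie.setDirector("Sample director");
        for (String property : new String[] {"id", "title", "director", "synopsis"}) {
            System.out.println(property + " has accessors: " + hasAccessors(Movie.class, property));
        }
    }
}

// Assumed shape of the domain class; field names follow the mapping file.
class Movie {
    private Long id;          // maps to ID, assigned by the generator
    private String title;     // maps to TITLE
    private String director;  // maps to DIRECTOR
    private String synopsis;  // maps to SYNOPSIS

    public Movie() { }        // no-argument constructor

    public Long getId() { return id; }
    public void setId(Long id) { this.id = id; }
    public String getTitle() { return title; }
    public void setTitle(String title) { this.title = title; }
    public String getDirector() { return director; }
    public void setDirector(String director) { this.director = director; }
    public String getSynopsis() { return synopsis; }
    public void setSynopsis(String synopsis) { this.synopsis = synopsis; }
}
```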

What does Hibernate do with this properties file?

The Hibernate framework loads this file to create a SessionFactory, which is a thread-safe global factory for creating Sessions. We should ideally create a single SessionFactory and share it across the application. Note that a SessionFactory is defined for one, and only one, database. For instance, if you have another database alongside MySQL, you should define the relevant configuration in a separate configuration file (another hibernate.cfg.xml-style file) to create a separate SessionFactory for that database too.

The goal of the SessionFactory is to create Session objects. A Session is the gateway to our database. It is the Session’s job to take care of all database operations such as saving, loading, and retrieving records from the relevant tables. The framework also maintains a transactional medium around our application. The operations involving database access are wrapped up in a single unit of work called a transaction, so the operations in that transaction either all succeed or are all rolled back.

Keep in mind that the configuration is used to create a Session via a SessionFactory instance. Before we move on, note that Session objects are not thread-safe and therefore should not be shared across threads.
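The division of labor described above (one shared factory, one short-lived session per unit of work, all-or-nothing transactions) can be sketched with a toy model. Everything here is a stand-in: ToySessionFactory and ToySession are invented classes backed by an in-memory map, not Hibernate's API. In Hibernate itself, the corresponding calls are new Configuration().configure().buildSessionFactory(), sessionFactory.openSession(), session.beginTransaction(), and commit() or rollback() on the returned Transaction.

```java
import java.util.HashMap;
import java.util.Map;

// Toy model only: none of these classes belong to Hibernate.
class UnitOfWorkDemo {

    // Runs one unit of work; returns the number of rows stored afterwards.
    static int run(ToySessionFactory factory, boolean failMidway) {
        ToySession session = factory.openSession(); // one session per unit of work
        try {
            session.save(1L, "first row");
            if (failMidway) {
                throw new IllegalStateException("simulated failure");
            }
            session.save(2L, "second row");
            session.commit();   // both rows become visible together
        } catch (RuntimeException e) {
            session.rollback(); // neither row is stored
        }
        return factory.rowCount();
    }

    public static void main(String[] args) {
        ToySessionFactory factory = new ToySessionFactory(); // built once, then shared
        System.out.println(run(factory, true));   // prints 0
        System.out.println(run(factory, false));  // prints 2
    }
}

// Shared, thread-safe factory; the map stands in for the database.
class ToySessionFactory {
    private final Map<Long, String> table = new HashMap<>();

    ToySession openSession() { return new ToySession(this); }

    synchronized void apply(Map<Long, String> changes) { table.putAll(changes); }

    synchronized int rowCount() { return table.size(); }
}

// Not thread-safe by design: meant to be used by a single thread and discarded.
class ToySession {
    private final ToySessionFactory factory;
    private final Map<Long, String> pending = new HashMap<>();

    ToySession(ToySessionFactory factory) { this.factory = factory; }

    void save(long id, String value) { pending.put(id, value); }

    void commit() { factory.apply(pending); pending.clear(); }

    void rollback() { pending.clear(); }
}
```

The toy session buffers writes until commit, which is a simplification; it is only meant to show why a failure in the middle leaves the stored data untouched.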

The Hibernate Session

The Hibernate Session embodies the concept of a persistence service (or persistence manager) that can be used to query and to perform insert, update, and delete operations on instances of a class mapped by Hibernate. In an ORM tool you perform all of these interactions using object-oriented semantics; that is, you no longer refer to tables and columns, but use Java classes and object properties instead. As its name implies, the Session is a short-lived, lightweight object used as a bridge during a conversation between the application and the database. The Session wraps the underlying JDBC connection or J2EE data source, and it serves as a first-level cache for the persistent objects bound to it.
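The first-level cache idea can be illustrated with a toy identity map: within one session, asking twice for the same identifier returns the same object and reaches the database only once. ToyCachingSession and Film are hypothetical names, and the "database hit" is just a counter; this is a sketch of the concept, not of Hibernate's internals.

```java
import java.util.HashMap;
import java.util.Map;

// Toy model only: illustrates a per-session cache keyed by identifier.
class FirstLevelCacheDemo {
    public static void main(String[] args) {
        ToyCachingSession session = new ToyCachingSession();
        Film a = session.get(1L);
        Film b = session.get(1L);
        System.out.println(a == b);                  // prints true
        System.out.println(session.databaseHits());  // prints 1
    }
}

// Hypothetical entity with only an identifier.
class Film {
    final long id;
    Film(long id) { this.id = id; }
}

class ToyCachingSession {
    private final Map<Long, Film> cache = new HashMap<>();
    private int databaseHits = 0;

    // Returns the cached instance if present, otherwise "loads" and caches it.
    Film get(long id) {
        Film cached = cache.get(id);
        if (cached == null) {
            databaseHits++;          // stands in for a SELECT
            cached = new Film(id);
            cache.put(id, cached);
        }
        return cached;
    }

    // Dropping the cache means the next get has to load again.
    void clear() { cache.clear(); }

    int databaseHits() { return databaseHits; }
}
```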

The Session Factory

Hibernate requires that you provide it with the information needed to connect to each database used by an application, as well as which classes are mapped to a given database. Each of these database-specific configuration files, along with the associated class mappings, is compiled and cached by the SessionFactory, which is used to retrieve Hibernate Sessions. The SessionFactory is a heavyweight object that should ideally be created only once (since creating it is an expensive and slow operation) and made available to the application code that needs to perform persistence operations.

Each SessionFactory is configured to work with a certain database platform by using one of the provided Hibernate dialects. The choice of Hibernate dialect is important when it comes to using database-specific features like native primary key generation schemes or Session locking. At the time of this writing Hibernate (version 3.1) supports 22 database dialects. Each of the dialect implementations is in the package org.hibernate.dialect.
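To see why the dialect matters for the native generator used in the mapping earlier, consider this toy sketch. As I understand the Hibernate 3 documentation, native picks identity, sequence, or hilo depending on what the underlying database supports. ToyDialect, KeyStrategy, and the capability flags below are invented for illustration and are not classes from org.hibernate.dialect.

```java
// Toy model only: shows a capability-based choice of key generation strategy.
class NativeGeneratorDemo {
    public static void main(String[] args) {
        ToyDialect mysqlLike = new ToyDialect("MySQL-like", true, false);
        ToyDialect oracleLike = new ToyDialect("Oracle-like", false, true);
        System.out.println(mysqlLike.name + " -> " + mysqlLike.nativeStrategy());   // MySQL-like -> IDENTITY
        System.out.println(oracleLike.name + " -> " + oracleLike.nativeStrategy()); // Oracle-like -> SEQUENCE
    }
}

enum KeyStrategy { IDENTITY, SEQUENCE, HILO }

class ToyDialect {
    final String name;
    final boolean supportsIdentity;   // e.g. auto-increment columns
    final boolean supportsSequences;  // e.g. database sequences

    ToyDialect(String name, boolean supportsIdentity, boolean supportsSequences) {
        this.name = name;
        this.supportsIdentity = supportsIdentity;
        this.supportsSequences = supportsSequences;
    }

    // Prefer identity columns, then sequences, then a table-based fallback.
    KeyStrategy nativeStrategy() {
        if (supportsIdentity) return KeyStrategy.IDENTITY;
        if (supportsSequences) return KeyStrategy.SEQUENCE;
        return KeyStrategy.HILO;
    }
}
```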

The Hibernate Object Mappings

Hibernate specifies how each object state is retrieved and stored in the database via an XML configuration file. Hibernate mappings are loaded at startup and are cached in the SessionFactory. Each mapping specifies a variety of parameters related to the persistence lifecycle of instances of the mapped class such as:

- Primary key mapping and generation scheme

- Object-field-to-table-column mappings

- Associations/Collections

- Caching settings

- Custom SQL, stored procedure calls, filters, parameterized queries, and more

Persistence Lifecycle of Hibernate

There are three possible states for a Hibernate mapped object. Understanding these states and the actions that cause state transitions will become very important when dealing with the more complex Hibernate problems.

In the transient state, the object is not associated with a database table. That is, its state has not been saved to a table, and the object has no associated database identity (no primary key has been assigned). Objects in the transient state are non-transactional, meaning that they do not participate in the scope of any transaction bound to a Hibernate Session. After a successful invocation of the save or saveOrUpdate method, an object ceases to be transient and becomes persistent. The Session delete method (or a delete query) produces the inverse effect, making a persistent object transient.

Persistent objects are objects with database identity. (If they have been assigned a primary key but have not yet been saved to the database, they are referred to as being in the “new” state). Persistent objects are transactional, which means that they participate in transactions associated with the Session (at the end of the transaction the object state will be synchronized with the database).

A persistent object that is no longer in the Session cache (no longer associated with the Session) becomes a detached object. This happens after a transaction completes, when the Session is closed or cleared, or when the object is explicitly evicted from the Session cache. Given Hibernate's transparency in providing persistence services to an object, objects in the detached state can effectively become inter-tier transfer objects, and in certain application architectures they can replace the need for DTOs (Data Transfer Objects).
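The three states and the transitions described above can be summarized as a small state machine. This is a sketch only: EntityState and the next helper are hypothetical, the operation names are plain strings taken from the text, and any operation not mentioned in the text leaves the state unchanged.

```java
// Toy model only: encodes the state transitions described in the text.
class LifecycleDemo {

    static EntityState next(EntityState state, String operation) {
        switch (state) {
            case TRANSIENT:
                if (operation.equals("save") || operation.equals("saveOrUpdate")) {
                    return EntityState.PERSISTENT;
                }
                break;
            case PERSISTENT:
                if (operation.equals("delete")) {
                    return EntityState.TRANSIENT;
                }
                if (operation.equals("evict") || operation.equals("clear") || operation.equals("close")) {
                    return EntityState.DETACHED;
                }
                break;
            default:
                break;
        }
        return state; // operations not covered in the text change nothing here
    }

    public static void main(String[] args) {
        EntityState state = EntityState.TRANSIENT;
        state = next(state, "save");
        System.out.println(state);  // prints PERSISTENT
        state = next(state, "close");
        System.out.println(state);  // prints DETACHED
    }
}

enum EntityState { TRANSIENT, PERSISTENT, DETACHED }
```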

I hope this has cleared up how Hibernate works and what its components do.

If you know anyone who has started learning Java, why not help them out? Just share this post with them. Thanks for studying today!