You can directly create and manage threads in an application by creating Thread objects. However, if you want to abstract away the low-level details of multi-threaded programming, you can use the Executor interface. In this post, we'll see how to use the Executor, ExecutorService, Callable, and Future interfaces and thread pools, with examples.

Executor Interface

Executor is an interface that declares only one method, execute(Runnable).

An Executor is an object that executes submitted Runnable tasks. The interface decouples task submission from the mechanics of how each task will be run, including details of thread use, scheduling, etc. An Executor is normally used instead of explicitly creating threads. This may not look like much of an interface by itself, but the types derived from it, such as ExecutorService, ThreadPoolExecutor, and ForkJoinPool, support useful functionality.

For example, rather than invoking new Thread(new RunnableTask()).start() for each of a set of tasks, you might use:

    Executor executor = anExecutor;
    executor.execute(new RunnableTask1());
    executor.execute(new RunnableTask2());
    ...

Executor Example

In MyExecutor, tasks are executed in some thread other than the caller's thread. The executor below spawns a new thread for each task. You can make another implementation where an executor can run the submitted task immediately in the caller's thread.

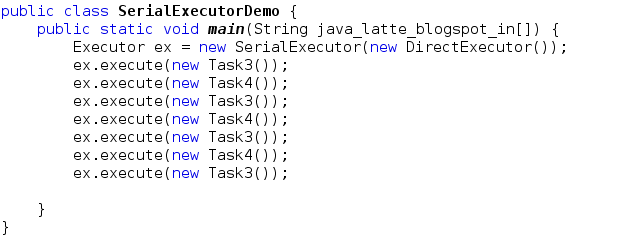
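As a rough sketch of the two variants described above: MyExecutor starts a new thread for each task, while DirectExecutor (a name assumed here for illustration) runs the task in the caller's thread. The demo class and the printed message are also illustrative assumptions, not the original listing.

```java
import java.util.concurrent.Executor;

class ExecutorDemo {
    public static void main(String[] args) {
        Runnable task = () ->
            System.out.println("Running in " + Thread.currentThread().getName());

        new MyExecutor().execute(task);      // runs in a newly created thread
        new DirectExecutor().execute(task);  // runs in the calling (main) thread
    }
}

// Spawns a new thread for each submitted task.
class MyExecutor implements Executor {
    @Override
    public void execute(Runnable command) {
        new Thread(command).start();
    }
}

// Hypothetical alternative: runs the task immediately in the caller's thread.
class DirectExecutor implements Executor {
    @Override
    public void execute(Runnable command) {
        command.run();
    }
}
```

Both classes satisfy the same one-method contract, so calling code does not need to know which threading policy is in use.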

Many Executor implementations impose some sort of limitation on how and when tasks are scheduled. The executor below serializes the submission of tasks to a second executor, illustrating a composite executor.

In this example, we'll use an ArrayDeque to store the Runnable tasks.
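The sketch below follows the composite-executor pattern given in the Executor API documentation: tasks wait in an ArrayDeque and are handed to a second executor one at a time. The demo class and its thread-per-task second executor are assumptions added here so the example can be run.

```java
import java.util.ArrayDeque;
import java.util.Queue;
import java.util.concurrent.Executor;

class SerialExecutorDemo {
    public static void main(String[] args) {
        // Second executor (an assumption for this demo): one new thread per task.
        Executor threadPerTask = r -> new Thread(r).start();
        Executor serial = new SerialExecutor(threadPerTask);
        for (int i = 1; i <= 3; i++) {
            final int id = i;
            serial.execute(() -> System.out.println("task " + id));
        }
    }
}

class SerialExecutor implements Executor {
    private final Queue<Runnable> tasks = new ArrayDeque<>();
    private final Executor executor;  // the second executor that actually runs tasks
    private Runnable active;          // task currently handed to the second executor

    SerialExecutor(Executor executor) {
        this.executor = executor;
    }

    @Override
    public synchronized void execute(Runnable r) {
        // Wrap the task so that the next one is scheduled when it finishes.
        tasks.add(() -> {
            try {
                r.run();
            } finally {
                scheduleNext();
            }
        });
        if (active == null) {
            scheduleNext();
        }
    }

    private synchronized void scheduleNext() {
        if ((active = tasks.poll()) != null) {
            executor.execute(active);
        }
    }
}
```

Because each task schedules its successor only after it finishes, tasks run strictly in submission order even though the second executor may use a different thread each time.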

Thread Pool

A thread pool is a group of threads created up front that wait for jobs and execute them. The idea is to keep the threads alive so that we don't pay the overhead of creating them every time.

Callable interface

A Callable is a task that returns a result and may throw an exception. Implementors define a single method with no arguments called call().

Because you cannot pass a Callable to a Thread for execution, you use an ExecutorService to execute it instead. The service accepts Callable objects through its submit() method.

Difference between the Runnable and Callable interface

The Callable interface is similar to Runnable, in that both are designed for classes whose instances are potentially executed by another thread. A Runnable, however, does not return a result and cannot throw a checked exception.

To execute a task using the Callable object, you first create a thread pool. A thread pool is a collection of threads that can execute tasks. You create a thread pool using the Executors utility class. This class provides methods to get instances of thread pools, thread factories, etc.
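To make the difference concrete, here is a small sketch that submits one Runnable and two Callables to a pool obtained from Executors. The class name and the parsePositive helper are hypothetical and exist only for illustration.

```java
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

class RunnableVsCallable {
    public static void main(String[] args) throws Exception {
        ExecutorService pool = Executors.newSingleThreadExecutor();
        try {
            // Runnable: no result, so the Future only signals completion.
            Runnable printer = () -> System.out.println("hello from a Runnable");
            Future<?> done = pool.submit(printer);
            System.out.println("Runnable result: " + done.get()); // get() yields null

            // Callable: returns a value and may throw a checked exception.
            Callable<Integer> parser = () -> parsePositive("42");
            System.out.println("Callable result: " + pool.submit(parser).get());

            // An exception thrown by call() reaches the caller wrapped in ExecutionException.
            Callable<Integer> failing = () -> parsePositive("-1");
            try {
                pool.submit(failing).get();
            } catch (ExecutionException e) {
                System.out.println("Task failed: " + e.getCause().getMessage());
            }
        } finally {
            pool.shutdown();
        }
    }

    // Hypothetical helper that throws a checked exception for invalid input.
    static int parsePositive(String text) throws Exception {
        int value = Integer.parseInt(text);
        if (value <= 0) {
            throw new Exception("not positive: " + value);
        }
        return value;
    }
}
```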

ExecutorService interface

An ExecutorService is an Executor that provides methods to manage termination and methods that can produce a Future for tracking the progress of one or more asynchronous tasks. The ExecutorService interface extends the Executor interface and provides services such as termination of threads and production of Future objects. Some tasks may take considerable time to complete, so when you submit a task to the executor service, you get back a Future object.

Interface Future

A Future represents a value that a task will produce at some point in the future (i.e., the value becomes available once the task completes). You can use the isDone() method of the Future interface to check whether the task is complete and then use the get() method to fetch the result. If you call get() while the task is not yet complete, the call blocks until the task completes and then returns the value.
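A minimal sketch of isDone() and get() in action follows. The slowGreeting task and its 200 ms sleep are assumptions that simulate a long-running job.

```java
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

class FutureDemo {
    public static void main(String[] args) throws Exception {
        ExecutorService executor = Executors.newSingleThreadExecutor();
        try {
            Callable<String> task = FutureDemo::slowGreeting;
            Future<String> future = executor.submit(task);

            // Poll without blocking; the task is most likely still running here.
            System.out.println("Done right after submit? " + future.isDone());

            // get() blocks the calling thread until the result is available.
            String result = future.get();
            System.out.println("Result: " + result);
            System.out.println("Done after get()? " + future.isDone());
        } finally {
            executor.shutdown();
        }
    }

    // Hypothetical slow task: sleeps briefly to simulate real work.
    static String slowGreeting() throws InterruptedException {
        Thread.sleep(200);
        return "hello";
    }
}
```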

In the next example, we create a Factorial class that implements Callable so that it can be passed to an ExecutorService and executed as a task.

In this program, you have a Factorial class that implements Callable. Since the task is to compute the factorial of a number N, the task needs to return a result. Inside the Factorial class, you define the call() method that actually performs the task. In the CallableExample class, you first create an instance of the Factorial class. You then need to execute this task. For the sake of simplicity, you get a single-threaded executor by calling the newSingleThreadExecutor() method in the Executors class. Note that you could use other methods such as newFixedThreadPool(nThreads) to create a thread pool with multiple threads, depending on the level of parallelism you need.

Once you get an ExecutorService, you submit the task for execution. ExecutorService abstracts details such as when the task is executed, how the task is assigned to the threads, etc. You get a reference to Future<Long> when you call the submit(task) method. From this future reference, you call the get() method to fetch the result after completing the task.
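The program described above might look like the following sketch. The class names Factorial and CallableExample come from the description; the input value 20 and the range check are assumptions (20! is the largest factorial that fits in a long).

```java
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

class CallableExample {
    public static void main(String[] args) throws Exception {
        Factorial task = new Factorial(20);
        ExecutorService executor = Executors.newSingleThreadExecutor();
        try {
            // submit() returns immediately with a Future for the pending result.
            Future<Long> future = executor.submit(task);
            System.out.println("Factorial of 20 is " + future.get());
        } finally {
            executor.shutdown();
        }
    }
}

// The task: computes N! and returns it as the result of call().
class Factorial implements Callable<Long> {
    private final long n;

    Factorial(long n) {
        this.n = n;
    }

    @Override
    public Long call() throws Exception {
        if (n < 0 || n > 20) {
            // 21! no longer fits in a long, so reject it instead of overflowing.
            throw new Exception("n must be between 0 and 20, got " + n);
        }
        long result = 1;
        for (long i = 2; i <= n; i++) {
            result *= i;
        }
        return result;
    }
}
```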

newFixedThreadPool factory method

The same pattern applies to a pool created with Executors.newFixedThreadPool(nThreads): if a task is still executing when you call future.get(), get() blocks until the task completes.

Advantage of ExecutorService

Executor may be a simple interface, but it forms the basis for a flexible and powerful framework for asynchronous task execution that supports a wide variety of task execution policies.

- The executor service manages threads asynchronously.

- You can use a Callable to get a return value once the task completes.

- shutdown() lets all tasks that were already submitted finish before the executor terminates.

- It provides mechanisms for safely starting, shutting down, submitting, executing, and blocking on the successful or abrupt termination of tasks.

- It provides a standard means of decoupling task submission from task execution, describing tasks as Runnable.

- The Executor implementations also provide lifecycle support and hooks for adding statistics gathering, application management, and monitoring.

- Rather than spending your time implementing the underlying infrastructure for parallelism, the concurrent framework lets you focus on structuring tasks, dependencies, and potential parallelism.

- It makes managing and scheduling several threads easy.

- This is especially useful if your program needs to run several threads at once, for example when you want to execute two threads at a time.

In a pool created with newFixedThreadPool(n), at most n threads are actively processing tasks at any point. If additional tasks are submitted when all threads are active, they wait in the queue until a thread is available. If any thread terminates due to a failure during execution prior to shutdown, a new one takes its place if needed to execute subsequent tasks. The threads in the pool exist until the pool is explicitly shut down.

Example: how an executor service is useful when you need to run several threads at once.
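One way to see this is a fixed pool of two threads fed five tasks. In this sketch the class name, the peakConcurrency helper, the 50 ms sleep, and the counters are assumptions added to measure how many tasks ever run at the same moment.

```java
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

class FixedPoolDemo {
    public static void main(String[] args) throws InterruptedException {
        // Five tasks, but never more than two running at the same time.
        System.out.println("Peak concurrent tasks: " + peakConcurrency(2, 5));
    }

    // Runs taskCount short tasks on a fixed pool and reports the highest
    // number of tasks that were observed running simultaneously.
    static int peakConcurrency(int poolSize, int taskCount) throws InterruptedException {
        ExecutorService pool = Executors.newFixedThreadPool(poolSize);
        AtomicInteger running = new AtomicInteger();
        AtomicInteger peak = new AtomicInteger();
        for (int i = 0; i < taskCount; i++) {
            pool.execute(() -> {
                int now = running.incrementAndGet();
                peak.accumulateAndGet(now, Math::max);
                try {
                    Thread.sleep(50); // simulate work
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                }
                running.decrementAndGet();
            });
        }
        pool.shutdown();                             // accept no new tasks, finish queued ones
        pool.awaitTermination(10, TimeUnit.SECONDS); // wait for the queued tasks to complete
        return peak.get();
    }
}
```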

ExecutorService is a full Producer-Consumer implementation

An ExecutorService backed by a thread pool is not only a thread pool; it is a full producer-consumer implementation. Internally, the pool keeps a thread-safe queue of Runnables (FutureTask instances, to be precise) that holds the tasks you submit(). Idle threads in the pool block on that queue, waiting for tasks to execute. When you submit() a task, exactly one thread picks it up and runs it. submit() itself does not wait for a pool thread to finish processing.

On the other hand, if you submit a huge number of tasks (or long-running ones), you might end up with all threads in the pool occupied and some tasks waiting in the queue. Once any thread is done with its task, it immediately picks up the next one from the head of the queue.
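The queue can be observed directly by building a ThreadPoolExecutor with an explicit LinkedBlockingQueue, which mirrors how a fixed pool is configured. This is only a sketch: the class name, the queuedWhileBusy helper, and the latch that keeps the workers busy are assumptions for illustration.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

class QueueDemo {
    public static void main(String[] args) throws InterruptedException {
        // With 2 threads and 6 blocked tasks, the remaining tasks wait in the queue.
        System.out.println("Tasks waiting in the queue: " + queuedWhileBusy(2, 6));
    }

    static int queuedWhileBusy(int threads, int tasks) throws InterruptedException {
        ThreadPoolExecutor pool = new ThreadPoolExecutor(
                threads, threads, 0L, TimeUnit.MILLISECONDS, new LinkedBlockingQueue<>());
        CountDownLatch release = new CountDownLatch(1);
        for (int i = 0; i < tasks; i++) {
            // Each task blocks until released, keeping its worker thread occupied.
            pool.submit(() -> {
                release.await();
                return null;
            });
        }
        int queued = pool.getQueue().size(); // tasks produced but not yet consumed
        release.countDown();                 // let the workers drain the queue
        pool.shutdown();
        pool.awaitTermination(5, TimeUnit.SECONDS);
        return queued;
    }
}
```

The submitting thread plays the producer and the pool threads play the consumers; the queue size reported here is the number of tasks minus the number of busy threads.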

ThreadFactory

ThreadFactory is an interface for objects that create new threads on demand, so that you do not have to create threads explicitly by calling new Thread(). Using thread factories removes hardwiring of calls to new Thread, enabling applications to use special thread subclasses, priorities, etc.

For example, assume that you often create high-priority threads. You can create a MaxPriorityThreadFactory to set the default priority of threads created by that factory to maximum priority.

With the use of ThreadFactory, you can reduce boilerplate code to set thread priority, name, thread-pool, etc.
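A MaxPriorityThreadFactory along those lines could be sketched as follows. The thread-naming scheme and the demo class are assumptions; only the factory's purpose comes from the text above.

```java
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.ThreadFactory;
import java.util.concurrent.atomic.AtomicInteger;

class ThreadFactoryDemo {
    public static void main(String[] args) {
        // Every thread in this pool is created by the factory.
        ExecutorService pool = Executors.newFixedThreadPool(2, new MaxPriorityThreadFactory());
        pool.execute(() -> {
            Thread t = Thread.currentThread();
            System.out.println(t.getName() + " has priority " + t.getPriority());
        });
        pool.shutdown();
    }
}

class MaxPriorityThreadFactory implements ThreadFactory {
    private final AtomicInteger counter = new AtomicInteger(1);

    @Override
    public Thread newThread(Runnable r) {
        // The "max-priority-N" naming scheme is an assumption for this sketch.
        Thread thread = new Thread(r, "max-priority-" + counter.getAndIncrement());
        thread.setPriority(Thread.MAX_PRIORITY);
        return thread;
    }
}
```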

ThreadLocalRandom Class

In concurrent programming, you’ll find that there is often a need to generate random numbers.

Using Math.random() is not efficient in concurrent programs: all threads share a single generator, so heavy use from many threads causes contention. For this reason, the java.util.concurrent package provides the ThreadLocalRandom class, which gives each thread its own generator and is suitable for use in concurrent programs. You can use ThreadLocalRandom.current() and then call methods such as nextInt() and nextFloat() to generate the random numbers.
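A short sketch of the usage described above; the rollDie helper and the two demo threads are assumptions for illustration.

```java
import java.util.concurrent.ThreadLocalRandom;

class RandomDemo {
    public static void main(String[] args) throws InterruptedException {
        Runnable roller = () -> {
            int roll = rollDie();
            float fraction = ThreadLocalRandom.current().nextFloat(); // in [0.0, 1.0)
            System.out.println(Thread.currentThread().getName()
                    + " rolled " + roll + ", fraction " + fraction);
        };
        Thread a = new Thread(roller);
        Thread b = new Thread(roller);
        a.start();
        b.start();
        a.join();
        b.join();
    }

    // current() returns the generator that belongs to the calling thread,
    // so concurrent callers do not contend for a shared generator.
    static int rollDie() {
        return ThreadLocalRandom.current().nextInt(1, 7); // 1 to 6; upper bound is exclusive
    }
}
```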

Final example: in this program, we create a class that sums the values from 1 to N, where N is a large number. We divide the work among 10 threads. Once the computation is complete, we add up the results from all the threads.
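One possible shape for such a program is sketched below. The class name SumOfN, the helper methods, and the choice of N = 1,000,000 are assumptions; the closed-form N(N+1)/2 is printed only as a cross-check.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

class SumOfN {
    static final int THREADS = 10;

    public static void main(String[] args) throws Exception {
        long n = 1_000_000L;
        System.out.println("Parallel sum = " + parallelSum(n));
        System.out.println("Formula      = " + n * (n + 1) / 2);
    }

    // Splits 1..n into THREADS contiguous ranges and sums each range in the pool.
    static long parallelSum(long n) throws Exception {
        ExecutorService pool = Executors.newFixedThreadPool(THREADS);
        try {
            long chunk = n / THREADS;
            List<Future<Long>> parts = new ArrayList<>();
            for (int i = 0; i < THREADS; i++) {
                long from = i * chunk + 1;
                // The last range absorbs the remainder when n is not divisible by THREADS.
                long to = (i == THREADS - 1) ? n : (i + 1) * chunk;
                Callable<Long> task = () -> sumRange(from, to);
                parts.add(pool.submit(task));
            }
            long total = 0;
            for (Future<Long> part : parts) {
                total += part.get(); // blocks until that partial sum is ready
            }
            return total;
        } finally {
            pool.shutdown();
        }
    }

    static long sumRange(long from, long to) {
        long sum = 0;
        for (long i = from; i <= to; i++) {
            sum += i;
        }
        return sum;
    }
}
```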

If you know anyone who has started learning Java, why not help them out! Just share this post with them.

Thanks for studying today!...
